My Review of Udacity’s Deep Learning Nanodegree

Hello, and welcome to my review of the Deep Learning Nanodegree from Udacity. I’ve completed seven(!) nanodegrees on Udacity, and this one is my favorite.

Background

I’m a machine learning engineer by trade, and today I run a startup that applies deep learning algorithms to inspect railway infrastructure. I’ve never attended university, so everything I know comes from hands-on practice, reading books, and online courses.

Deep Learning

Deep learning is a fantastic technology, and it’s a great addition to your skillset as a software engineer, machine learning engineer, or data scientist. I hope you enjoy my review! 😊

Summary

Here’s a quick summary in case you’re on a tight schedule.

Content (5/5)

This course covers all the major topics in deep learning and has a fantastic section about generative adversarial networks, taught in part by one of the scientists who came up with the entire concept.

Quality (5/5)

If there's one thing that Udacity does better than other course creators, it is the quality of their content. They spend more time and resources on producing beautiful videos, which is perfect for visual learners.

Projects & Exercises (4.5/5)

The exercises and projects are all relevant, but there’s a bit too much boilerplate code for my taste. I prefer to build from scratch when learning something new and would have liked to see more of that.

Affordability (3/5)

Nanodegrees from Udacity cost more than other online courses, but it's a reasonable price for the quality. If the aspects that make Udacity great matter less to you, the price is a little steep.

Overall (4.5/5)

The only downside of this program is that the price is higher than most alternatives. If your finances don’t allow for this expense, don’t purchase the course. It’s possible to acquire the same knowledge on your own through reading and dedicated practice.

But if you don’t mind the expense and want to build machine learning algorithms for complex data such as images and text, I recommend the Deep Learning Nanodegree. It’s the best machine learning program on Udacity.

Just remember that no course or education can replace hands-on experience, so make sure to practice your craft with or without this certificate.

Full Review of the Udacity Deep Learning Nanodegree

The Deep Learning Nanodegree has six sections, but the first one is just an introduction and doesn’t have a mandatory project. All the fun starts with part 2, but let’s look at each one of them in detail.

Part 1: Introduction to deep learning

In part 1, the goal is to get you familiar with Udacity. They welcome you to the program, introduce you to their career services, and get you up and running with some Python libraries you need throughout the course.

If your linear algebra is rusty, you can check out the lessons covering essential mathematics of deep learning.

If you have prior experience with Udacity and feel confident with Python libraries like NumPy, you can safely skip this part. There’s no project stopping you from heading over to part 2.

One thing to note is that there’s a career lesson introducing you to GitHub. If you don’t have a GitHub account, follow the instructions closely and make the most of Udacity’s career service.

I can’t stress enough how important a good GitHub profile is when looking for work. It’s more important than any certificate, degree, or work experience. I would never hire a programmer or machine learning engineer who doesn’t have one.

Part 2: Neural networks

Neural networks are the bread and butter of deep learning. They are a family of algorithms initially inspired by the human brain, although hardly anyone makes that comparison anymore.

The phrase “Deep Learning” derives from a sub-type of neural networks called “Deep Neural Networks”, where deep means that the algorithm has multiple layers. Today, virtually all neural networks have multiple layers, so you don’t have to say deep if you don’t want to.

Understanding neural networks

In the first real lesson of the Udacity Deep Learning Nanodegree, the goal is to understand the inner workings of neural networks. These algorithms can take thousands of shapes, but they are always built from the same building blocks.

Just like in mathematics, a solid understanding of the fundamentals makes everything so much easier down the line.

Of course, you can’t learn everything about neural networks in a series of short videos, but the content is excellent. There are around 20 videos and some questions to ensure that you’ve understood the essence.

Implementing gradient descent from scratch

To better understand deep learning, the next lesson teaches you to implement neural networks from scratch using NumPy.

Without any popular frameworks like PyTorch, you must implement concepts such as backpropagation yourself. You can do that for simple networks, but it becomes complex quickly with advanced architectures.

It’s probably the only time in your life that you build a deep learning algorithm without a deep learning framework. I don’t think this exercise is necessary, but it’s a decent way to understand what happens during training.

Udacity’s goal with these lessons is simply to make sure that you fully grasp gradient descent and backpropagation.
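To make “from scratch” concrete, here is a minimal sketch of a two-layer network trained with backpropagation and plain gradient descent. It assumes a toy XOR dataset, sigmoid activations, and a mean-squared-error loss; the course’s own exercises use different data and may structure the code differently, and the name `train_xor` is illustrative only. The backward pass applies the chain rule layer by layer, and each update steps the weights against their gradient.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def train_xor(epochs=5000, learning_rate=1.0, hidden_units=4, seed=0):
    """Train a tiny two-layer network on XOR using only NumPy."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    # Random initial weights, zero biases.
    W1 = rng.normal(0.0, 1.0, size=(2, hidden_units))
    b1 = np.zeros((1, hidden_units))
    W2 = rng.normal(0.0, 1.0, size=(hidden_units, 1))
    b2 = np.zeros((1, 1))

    losses = []
    for _ in range(epochs):
        # Forward pass.
        h = sigmoid(X @ W1 + b1)
        out = sigmoid(h @ W2 + b2)
        losses.append(np.mean((out - y) ** 2))

        # Backward pass: chain rule from the MSE loss back to each weight.
        d_out = 2.0 * (out - y) / len(X) * out * (1.0 - out)
        dW2 = h.T @ d_out
        db2 = d_out.sum(axis=0, keepdims=True)
        d_h = (d_out @ W2.T) * h * (1.0 - h)
        dW1 = X.T @ d_h
        db1 = d_h.sum(axis=0, keepdims=True)

        # Gradient descent: step against the gradient.
        W1 -= learning_rate * dW1
        b1 -= learning_rate * db1
        W2 -= learning_rate * dW2
        b2 -= learning_rate * db2

    return losses, out


losses, predictions = train_xor()
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Writing the backward pass by hand like this is exactly what frameworks such as PyTorch automate, which is why it gets unwieldy for larger architectures.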

How to train a neural network

Now that you know how neural networks function, the next step is to study best practices for training them. This lesson covers critical concepts such as early stopping, local minima, learning rates, and different optimizers.

You have a long way to go before you become a deep learning mastermind, but what you get here is an excellent walkthrough of concepts that you will encounter all the time. You can now continue to expand your understanding with a better idea of what happens behind the scenes.
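Early stopping, for example, fits in a few lines. The helper below is a hypothetical sketch, not code from the course: it scans a list of validation losses and reports the best epoch and the epoch where training would stop for a given `patience`. In a real training loop you would evaluate after every epoch and restore the weights saved at the best epoch.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # Never triggered: training ran to the last epoch.
    return best_epoch, len(val_losses) - 1


best, stop = early_stopping_epoch([0.9, 0.7, 0.6, 0.62, 0.61, 0.65, 0.5], patience=3)
print(best, stop)  # 2 5
```

Note that the improvement at the very last epoch is never seen: that is the trade-off `patience` controls.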

Your first Nanodegree project

To finish the first section, you need to take what you learned in the lessons and train a neural network built with NumPy on occupancy data from a bike-sharing service. If you did all the exercises, this project doesn’t take long.

Overall, I think this first part of the deep learning nanodegree does a great job of introducing students to neural networks. Whenever you want to learn something, it’s invaluable to have a solid understanding of the basics.

Part 3: Convolutional neural networks

We use convolutional neural networks (CNNs) primarily for images and video, but you can apply the architecture to many other complex data types.

The fundamentals of CNNs

The walkthrough on how CNNs work is top-notch. It’s very visual, and you only need to see it once to never forget how the main building blocks function.

Once you’ve learned everything about convolutional layers, strides, padding, and pooling layers, this part of the Nanodegree becomes even better by introducing some really cool and useful concepts.
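The arithmetic behind strides, padding, and pooling is easy to check in code. This is a minimal single-channel NumPy sketch (real frameworks handle batches and many channels and are far faster). Like most deep learning libraries, it computes cross-correlation, meaning the kernel is not flipped. For input size H, padding p, kernel size k, and stride s, the output size is (H + 2p - k) / s + 1, rounded down.

```python
import numpy as np


def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2D convolution (cross-correlation) with zero padding."""
    if padding > 0:
        image = np.pad(image, padding, mode="constant")
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Slide the kernel over the image, `stride` pixels at a time.
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * kernel)
    return out


def max_pool2d(feature_map, size=2, stride=2):
    """Keep only the largest value in each size x size window."""
    out_h = (feature_map.shape[0] - size) // stride + 1
    out_w = (feature_map.shape[1] - size) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            window = feature_map[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = window.max()
    return out


image = np.arange(36, dtype=float).reshape(6, 6)
kernel = np.ones((3, 3))
print(conv2d(image, kernel, stride=1, padding=1).shape)  # (6, 6): "same" padding
print(conv2d(image, kernel, stride=2, padding=1).shape)  # (3, 3)
print(max_pool2d(image).shape)                           # (3, 3)
```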

Introduction to transfer learning

First, we have transfer learning, where you use a pre-trained network as the starting point for your algorithm. This technique saves you many hours of training and almost always leads to better performance.

Also, some of the pre-trained algorithms you have access to cost millions of dollars to train, so transfer learning is certainly something you’ll use frequently.

You can use transfer learning in more applications than computer vision. However, this is where it’s most common. Starting with a pre-trained network is something you must learn. Luckily, it’s astonishingly simple and requires only a few lines of code.

Lesson 5 in the section about convolutional neural networks talks about weight initialization. However, it doesn’t mention that the most common way to initialize your weights is with a pre-trained network, because you often retrain the entire network rather than just the output layer.
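When you do train from random weights, two widely used schemes look like this in NumPy. These are the standard Glorot/Xavier uniform and He normal formulas, not necessarily the exact variants shown in the lesson, and the function names are illustrative. Starting from a pre-trained network simply replaces these random values with learned ones.

```python
import numpy as np


def xavier_uniform(fan_in, fan_out, rng):
    # Glorot/Xavier: keeps activation variance roughly stable for tanh/sigmoid layers.
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))


def he_normal(fan_in, fan_out, rng):
    # He/Kaiming: variance 2 / fan_in compensates for ReLU zeroing about half the inputs.
    std = np.sqrt(2.0 / fan_in)
    return rng.normal(0.0, std, size=(fan_in, fan_out))


rng = np.random.default_rng(0)
W_tanh = xavier_uniform(256, 128, rng)
W_relu = he_normal(256, 128, rng)
print(W_relu.std(), np.sqrt(2.0 / 256))  # sample std should be close to the target
```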

Learning about Autoencoders

Autoencoders are a type of architecture that compresses the original input into a smaller representation and then expands it back to the original size. It’s a versatile technique that you can use for generating made-up images, image segmentation, compression, and more.

Autoencoders pop up often in research, especially when it comes to generative networks. Having a solid understanding of this architecture allows you to tackle hundreds of exciting applications.
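A minimal sketch of the compress-then-expand idea, assuming a purely linear autoencoder trained with gradient descent on synthetic 4-D data that actually lies on a 2-D plane. Real autoencoders use nonlinear (often convolutional) layers, and `train_linear_autoencoder` is a hypothetical name. The 2-unit `code` is the compressed representation the decoder has to expand back.

```python
import numpy as np


def train_linear_autoencoder(X, code_size=2, epochs=2000, learning_rate=0.05, seed=0):
    """Compress X to `code_size` dimensions and reconstruct it, trained with gradient descent."""
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    W_enc = rng.normal(0.0, 0.1, size=(n_features, code_size))
    W_dec = rng.normal(0.0, 0.1, size=(code_size, n_features))

    losses = []
    for _ in range(epochs):
        code = X @ W_enc        # encoder: compress to the bottleneck
        recon = code @ W_dec    # decoder: expand back to the input size
        error = recon - X
        losses.append(np.mean(error ** 2))

        # Gradients of the mean squared reconstruction error.
        grad_recon = 2.0 * error / error.size
        grad_W_dec = code.T @ grad_recon
        grad_W_enc = X.T @ (grad_recon @ W_dec.T)
        W_enc -= learning_rate * grad_W_enc
        W_dec -= learning_rate * grad_W_dec
    return W_enc, W_dec, losses


rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 4))  # 4-D data on a 2-D plane
W_enc, W_dec, losses = train_linear_autoencoder(X)
print(f"reconstruction MSE: {losses[0]:.3f} -> {losses[-1]:.3f}")
```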

Creating something beautiful with style-transfer

Style transfer is perhaps not as valuable as autoencoders or transfer learning, but it’s just as fun. The idea is to use a pre-trained network to extract stylistic information from one image and transfer it to another image. For example, turning a family portrait into an oil painting.

In the video lessons, you’ll learn how to perform style transfer using intermediate layers of a VGG network. You get an excellent visual explanation of how to calculate the style loss using Gram matrices. Pay attention, because it’s a useful loss for many generative networks.
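The Gram matrix itself takes only a couple of lines. This sketch assumes you already have one layer’s activations as a NumPy array of shape (channels, height, width), for example from an intermediate VGG layer. The normalization constant varies between implementations, and a full style loss sums this term over several layers with per-layer weights. Because the Gram matrix correlates channels across all spatial positions, it captures texture and color statistics while discarding where things are in the image.

```python
import numpy as np


def gram_matrix(features):
    """features: activations from one conv layer, shape (channels, height, width)."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)   # one row per channel
    return flat @ flat.T / (c * h * w)  # channel-by-channel correlations


def style_loss(generated_features, style_features):
    """Mean squared difference between the two Gram matrices for one layer."""
    diff = gram_matrix(generated_features) - gram_matrix(style_features)
    return np.mean(diff ** 2)


rng = np.random.default_rng(0)
style = rng.normal(size=(64, 32, 32))
generated = rng.normal(size=(64, 32, 32))
print(gram_matrix(style).shape)      # (64, 64)
print(style_loss(generated, style))  # positive; exactly 0.0 when the inputs match
```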

Project: Classifying dog breeds in images

Your project is to build a convolutional neural network that classifies dog breeds. That’s perhaps not the most inspiring project. Given the variety of the previous lessons, I was hoping for something more challenging, although I suppose everyone needs to master the simple classifier.

There were many exciting topics in part 3, so it’s a shame that the project is plain image classification.

Part 4: Recurrent neural networks

Recurrent neural networks are frequently used with sequential data such as stock prices and text. In this section, you’ll learn more about these algorithms as well as some alternatives.

Understanding recurrent neural networks

To start, you’ll learn about the original architectures that dominated sequential modeling for many years. It includes a deep dive into standard RNNs, LSTMs, and GRUs.

There’s also an additional set of lessons that teaches you how to apply this type of deep learning algorithm in practice.

I haven’t encountered these types of RNNs as much in recent years, but it’s certainly good to understand how they work.
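At its core, a vanilla RNN is a loop that reuses the same weights at every time step and carries a hidden state forward. Below is a minimal NumPy sketch of the forward pass. LSTMs and GRUs add gates around this same recurrence, and the weight names are illustrative.

```python
import numpy as np


def rnn_forward(inputs, W_xh, W_hh, b_h, h0):
    """inputs: (seq_len, input_size). Returns hidden states (seq_len, hidden_size)."""
    h = h0
    states = []
    for x_t in inputs:
        # Same weights at every step; h carries information from earlier steps.
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 5, 7
states = rnn_forward(
    rng.normal(size=(seq_len, input_size)),
    rng.normal(scale=0.5, size=(input_size, hidden_size)),
    rng.normal(scale=0.5, size=(hidden_size, hidden_size)),
    np.zeros(hidden_size),
    np.zeros(hidden_size),
)
print(states.shape)  # (7, 5)
```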

RNNs for natural language processing

To build an algorithm for text, you’ll also learn how to create word embeddings using methods such as Word2Vec. We use embeddings to represent words as vectors of continuous numbers, which allows us to capture information about the relationships between different words.

There are several methods for converting text to a numerical format. Word2Vec is one of the first that managed to capture relationships between words.
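Word2Vec’s skip-gram variant learns embeddings by predicting the words that surround each center word. The first step is simply building (center, context) training pairs. This simplified sketch uses a fixed window, whereas the original method also randomizes window sizes and subsamples frequent words; the function name is hypothetical.

```python
def skipgram_pairs(tokens, window=2):
    """Generate (center, context) training pairs for a skip-gram model."""
    pairs = []
    for i, center in enumerate(tokens):
        # Every word within `window` positions of the center is a context word.
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


print(skipgram_pairs(["the", "cat", "sat"], window=1))
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat')]
```

A network trained to predict the context word from the center word ends up placing words that appear in similar contexts close together in embedding space.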

Next, you’ll look at one of the most common applications of natural language processing: sentiment analysis.

Sentiment analysis is a key feature on social media platforms like Twitter these days, where it serves as an important tool against misinformation and similar challenges.

Project: Generating TV-scripts

In the project, your task is to generate “fake” TV scripts based on actual scripts from Seinfeld. You’ll train the algorithm to predict the next word in a sequence based on real TV scripts and use that model to create new ones automatically.

A fun use case, but again there’s too much boilerplate, which makes it hard to understand what you’re actually doing.
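The core of the next-word task is a sliding window over the script. This hypothetical helper (not the project’s starter code) turns a token list into (input sequence, next word) pairs. In practice you would map words to integer IDs first and feed the sequences to an embedding layer followed by an RNN.

```python
def make_next_word_dataset(tokens, sequence_length=4):
    """Split a token list into (input sequence, next word) training pairs."""
    features, targets = [], []
    for i in range(len(tokens) - sequence_length):
        features.append(tokens[i:i + sequence_length])  # the words the model sees
        targets.append(tokens[i + sequence_length])      # the word it must predict
    return features, targets


tokens = "hello jerry hello newman hello".split()
features, targets = make_next_word_dataset(tokens, sequence_length=2)
print(features)  # [['hello', 'jerry'], ['jerry', 'hello'], ['hello', 'newman']]
print(targets)   # ['hello', 'newman', 'hello']
```

To generate a script, you feed a seed sequence, pick a next word from the model’s predicted distribution, append it, and repeat.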

Attention is all you need

A couple of years ago, the paper “Attention Is All You Need” changed the entire field of natural language processing. Today, very few people use LSTMs for text. Luckily, there’s a lesson about attention.
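The central operation from that paper, scaled dot-product attention, computes softmax(QK^T / sqrt(d_k)) V. Here is a minimal NumPy sketch for a single head without masking. In a transformer, Q, K, and V would come from learned linear projections of the input; here they are random for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Q: (n_queries, d_k), K: (n_keys, d_k), V: (n_keys, d_v)."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # similarity of every query to every key
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights         # weighted average of the values


rng = np.random.default_rng(0)
Q = rng.normal(size=(2, 4))
K = rng.normal(size=(3, 4))
V = rng.normal(size=(3, 5))
output, weights = scaled_dot_product_attention(Q, K, V)
print(output.shape, weights.shape)  # (2, 5) (2, 3)
```

Unlike an RNN, every position attends to every other position in one step, which is what makes transformers easy to parallelize.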

This is a good section, but it would be nice to update it with more modern material on transformers and attention. I liked the previous part about convolutional neural networks better.

Part 5: Generative adversarial networks

This section was by itself reason enough to purchase the Deep Learning Nanodegree. Generative adversarial networks are exceptionally fun to work with and have become essential for many applications.

The idea is to train two networks at the same time. The Generator creates content, and the Discriminator tries to separate the generated content from real examples. They are basically competing against each other.

This setup allows you to train algorithms on tasks where it’s hard to measure the error in a standard way. You can think of the discriminator as a complex and dynamic loss function.
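The competition between the two networks boils down to two loss functions. This is a minimal NumPy sketch using binary cross-entropy, assuming the discriminator outputs probabilities (frameworks usually work with raw logits for numerical stability) and using the common non-saturating form of the generator loss.

```python
import numpy as np


def bce(predictions, targets, eps=1e-7):
    """Binary cross-entropy on probabilities, clipped to avoid log(0)."""
    p = np.clip(predictions, eps, 1 - eps)
    return -np.mean(targets * np.log(p) + (1 - targets) * np.log(1 - p))


def discriminator_loss(d_real, d_fake):
    # The discriminator should output 1 for real examples and 0 for generated ones.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_loss(d_fake):
    # The generator wants the discriminator to label its samples as real.
    return bce(d_fake, np.ones_like(d_fake))


# A confident, correct discriminator has a low loss...
print(discriminator_loss(np.array([0.99]), np.array([0.01])))
# ...which means a high loss for the generator it is beating.
print(generator_loss(np.array([0.01])))
```

During training you alternate: one step that lowers the discriminator loss, then one step that lowers the generator loss, which is why the discriminator behaves like a loss function that keeps moving.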

To make it even better, the first teacher in this section is none other than Ian Goodfellow, the inventor of GANs.

Let’s take a closer look at the actual content.

GAN fundamentals

Most people taking the course are likely unfamiliar with generative adversarial networks. The goal of the first lesson is to explain the reasoning behind this research and to improve your understanding of how it works.

Next, you’ll learn more about GANs built with deep convolutional networks. I’ve never worked with any other types of GANs, although I’m sure they exist. That’s probably because I work almost exclusively with visual data.

What impresses me about Udacity is that I seem to remember what I learned for longer than on other course platforms, and that’s certainly the case for this section. It’s all thanks to the beautiful visualizations.

Pix2Pix & CycleGAN

As always with machine learning research, a new concept leads to additional papers that build on top of the original. Pix2Pix and CycleGAN are two such examples.

I don’t have much to add other than that I really enjoyed this section of the Udacity deep learning nanodegree.

Project: Generating fake faces

In the project, you’ll develop a standard GAN for generating fake faces. It’s a fun project because generating faces is common in research as well.

Before I participated in this Nanodegree, I didn’t know anything about GANs. Now, it’s something that I work with weekly. Commonly, you add an adversarial loss to some other simpler loss function to make the algorithm produce something that looks more realistic.
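As a sketch of that pattern, this is roughly how Pix2Pix-style models combine the two terms: an adversarial term that rewards fooling the discriminator plus a weighted L1 term that keeps the output close to the target. The weight of 100 matches the value used in the Pix2Pix paper, but treat it as a tunable hyperparameter. The function name is hypothetical, and `d_fake` is assumed to hold discriminator probabilities for the generated images.

```python
import numpy as np


def combined_generator_loss(d_fake, generated, target, l1_weight=100.0, eps=1e-7):
    """Adversarial loss plus a weighted L1 reconstruction loss."""
    p = np.clip(d_fake, eps, 1 - eps)
    adversarial = -np.mean(np.log(p))           # push D(G(x)) towards "real"
    l1 = np.mean(np.abs(generated - target))    # stay close to the ground truth
    return adversarial + l1_weight * l1


target = np.zeros((4, 4))
perfect = combined_generator_loss(np.array([0.5]), target.copy(), target)
blurry = combined_generator_loss(np.array([0.5]), target + 0.1, target)
print(perfect, blurry)  # the L1 term only adds to the loss when the output drifts
```

The L1 term alone tends to produce blurry averages; the adversarial term is what pushes the output towards realistic detail.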

Part 6: Deploying a model

Deploying and maintaining algorithms is just as important as creating them. We focus heavily on this type of software-related aspect of machine learning at my company, so I am glad that this section exists.

You’ll learn how to deploy and update an algorithm using Amazon SageMaker. I’m not a fan of SageMaker myself, but I suppose it’s a decent first option for many people.

There is certainly room for improvement, but it’s difficult for Udacity to go into depth on model deployment. You can deploy your algorithm in so many different ways, and the correct choice depends on the situation.

Pros & Cons

So far, this is the best Udacity Nanodegree that I’ve completed, but there’s also room for improvement. Here are my key takeaways.

What I loved about the deep learning nanodegree

Let's start with the positives.

Excellent visual presentation of complex topics

One thing that stands out when you compare any program on Udacity with other platforms is that they put more effort into visuals.

Instead of being satisfied with a graph or a drawing, they use beautiful colors and great design to make the lessons memorable. I still recall several such visualizations from other Udacity programs that I finished years ago.

If you’re like me, well-designed visuals significantly improve the learning experience, and the knowledge sticks for longer.

Great range of topics in the curriculum

The second strength, which is specific to the deep learning nanodegree rather than to Udacity as a learning platform, is the scope of the curriculum.

Deep learning is such a versatile technology, and the applications are endless. It’s challenging to create a program that prepares students for everything. The course covers several exciting concepts, such as style transfer, autoencoders, and CycleGAN, without becoming too shallow.

The nanodegree prepares students for actual work

Udacity targets people who want to start a new career. To make that possible, they must ensure that graduates can handle a junior position.

I believe that they succeed with that goal in the deep learning nanodegree. You won’t be an expert, but you’re ready to take on a challenge. By investing in a nanodegree from Udacity, you’ve already proven that you’re serious.

Remember that learning isn’t the same as watching all the videos and completing the projects. What I’m saying is that if you understand the content and can apply it outside of this course, you are eligible for a job as a deep learning engineer.

The section about GANs

Lastly, I want to emphasize the greatness of the fifth section that teaches you about generative adversarial networks. It’s fantastic that Ian Goodfellow, one of the masterminds behind the entire concept, joined for a couple of videos.

Things I didn’t love

And a few negatives.

Too much boilerplate code

Most projects and exercises contain a lot of boilerplate code, so you never need to write everything yourself. While this might be comfortable, it doesn’t reflect what real-world work looks like.

It would help if you worked on some of your own problems to get more practical experience with building algorithms. Otherwise, you might be dumbstruck if you’re given an assignment and a blank sheet of digital paper.

The section on deploying models

As mentioned previously in this deep learning Nanodegree review, the section about deploying models isn’t perfect. It feels like they included this part because they needed to have something on that subject but haven’t given it as much love as the other sections.

I understand that it’s hard to do an in-depth section about deployment because of the many alternatives, and I’m not sure what they can do that’s better.

Who benefits from this nanodegree?

The course is perfect for programmers who want to add deep learning to their toolkit. It’s also great for machine learning engineers who wish to learn new concepts or solidify the essentials.

You don’t need machine learning experience to take this course, but it’s good to be comfortable with Python. Even if you have a lot of experience with deep learning, like me, you’ll likely learn something new from this course.

Can I work as a deep learning engineer after finishing this online course?

No, but hear me out.

There are no online courses about programming or machine learning that can replace hands-on practice.

Becoming a great machine learning engineer takes a long time. However, programs such as this one can accelerate your learning curve by ensuring that you have a solid foundation to start from.

I want to emphasize that this is true for traditional education as well. I’m just as unlikely to hire a university-educated person as someone with a Udacity Nanodegree if they can’t show me a great GitHub account. Programming is a craft, and the only way to prove your skills is to show what you’ve built.

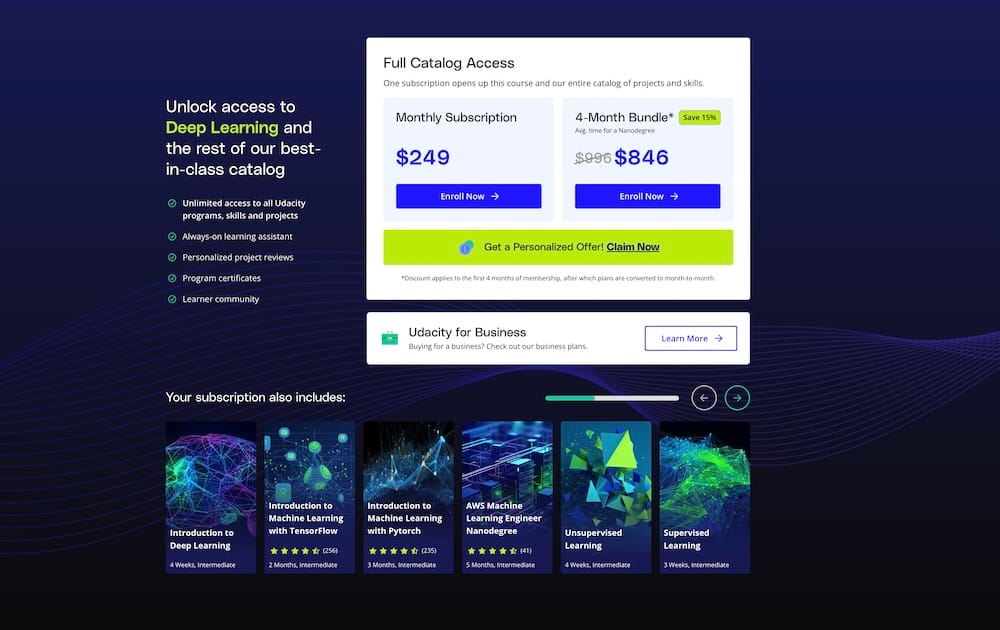

Is the Udacity Deep Learning Nanodegree Worth the Cost?

Udacity courses cost more than most other online programs, so the price is an essential topic for this Deep Learning Nanodegree review.

Currently, the price to enroll is $249 per month, but you get access to their entire catalog. It's still a high price compared to most other online course platforms, but it's a good investment if you're a visual learner and if your finances don't take too much of a hit.

It always comes down to your own situation. Are you comfortable spending $249 a month on online education? If the answer is yes, this is certainly a great option.

You can learn anything you want for free, but it might take longer. And even if you purchase this Nanodegree, it doesn't replace the need for hands-on practice.

Personal discounts

It's possible to get a discount on your first month of learning. To get the most from your investment, clear your calendar, grab the discount, and finish the course in a month.

Also, when you finish the Deep Learning Nanodegree, you keep access to the content even after you cancel your subscription.

Thank you for reading, and see you next time! 😄